Understanding Machine Learning Bias

Machine learning (ML) is transforming industries by helping businesses make decisions and improve processes. One significant challenge, however, is machine learning bias. Understanding it is crucial for developing fair and effective AI systems. This article explains what machine learning bias is, what causes it, what harm it does, and how to mitigate it.

1. What is Machine Learning Bias?

Machine learning bias refers to systematic errors in a model's outputs that lead to unfair outcomes. In practice, a model is biased when its results are consistently less accurate or less favorable for a particular group of people. This issue can arise in many applications, including hiring, lending, and law enforcement.

Bias can show up in predictions, classifications, and recommendations alike. Knowing which type of bias is at work is the first step toward addressing it effectively.

2. Types of Bias in Machine Learning

There are several types of bias that can occur in machine learning:

- Data Bias: This type arises from the data used to train the model. If the training data is unrepresentative or skewed, the model is likely to reproduce those skews. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, it may perform poorly on darker-skinned individuals.

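- Algorithmic Bias: This occurs when design choices in the algorithm itself, such as the objective it optimizes or the features it weighs most heavily, favor certain outcomes. For instance, a model trained only to maximize overall accuracy may tolerate high error rates for a small group, because those errors barely affect the aggregate score.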

- Confirmation Bias: This happens when data scientists or developers hold preconceived notions and unconsciously choose data that supports their beliefs. The result can be a flawed model that does not accurately represent reality.

- Measurement Bias: This type arises when the tools or methods used to collect data introduce bias. For example, using flawed survey questions can lead to inaccurate responses, affecting the training data.
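A quick first check for data bias is to compare how often each group appears in the training data with that group's share of the population the model will serve. The sketch below assumes every training example carries a group label and that reference shares are known; the function name `representation_gap` and all numbers are hypothetical, chosen only for illustration.

```python
from collections import Counter


def representation_gap(train_groups, reference_shares):
    """Return, for each group, its share of the training data minus its
    reference (population) share. Negative values mean underrepresentation."""
    counts = Counter(train_groups)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical face dataset: 80 light-skinned and 20 darker-skinned examples,
# compared against an assumed 50/50 reference population.
train_groups = ["light"] * 80 + ["dark"] * 20
reference_shares = {"light": 0.5, "dark": 0.5}

gaps = representation_gap(train_groups, reference_shares)
print({group: round(gap, 2) for group, gap in gaps.items()})
# → {'light': 0.3, 'dark': -0.3}
```

A large negative gap flags a group the model has seen too little of. It does not by itself prove that the model performs worse for that group; that still has to be measured on held-out data.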

3. Causes of Machine Learning Bias

Understanding the causes of machine learning bias is essential for prevention. Here are some common factors:

- Skewed Training Data: If the training dataset does not represent the entire population, the model will likely produce biased results. This is especially problematic in areas like hiring, where biased data can lead to discrimination against qualified candidates.

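- Feature Selection: Choosing the wrong features to include in the model can introduce bias. If certain characteristics are prioritized, specific groups can be treated unfairly. Even when a protected attribute such as race is excluded, a closely correlated feature (a proxy, such as a postal code) can reintroduce it.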

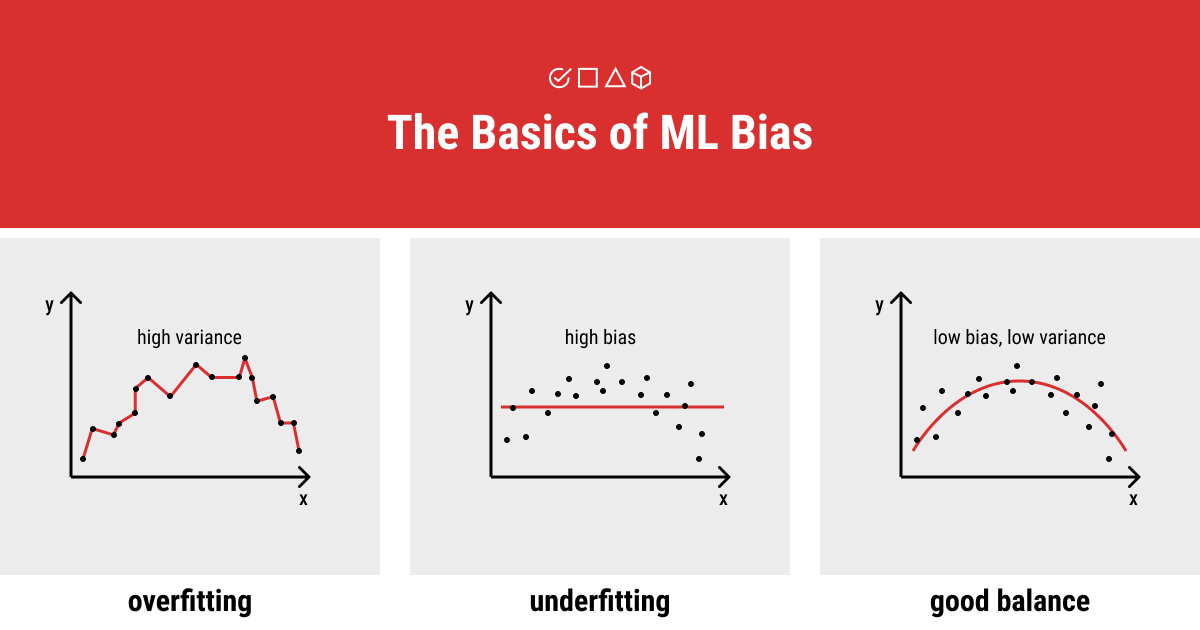

- Model Complexity: Overly complex models are prone to overfitting: they perform well on training data but poorly on new data. Because such models are sensitive to noise in the data, they may also exhibit biased behavior.

- Human Error: Data scientists may unintentionally introduce bias through their decisions. For example, inadequate data preprocessing or unvalidated assumptions can produce biased outcomes.
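One way feature selection reintroduces bias is through proxies: features that are not themselves protected but closely track a protected attribute. A simple screen is to measure the correlation between each candidate feature and the protected attribute. The sketch below uses Pearson correlation with an arbitrary threshold of 0.5; the feature names, data, and threshold are hypothetical. Note that a linear, one-feature-at-a-time check like this will miss proxies formed by combinations of features.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant feature carries no correlation signal
    return cov / (sx * sy)


def flag_proxy_features(features, protected, threshold=0.5):
    """Return {feature: rounded correlation} for features whose absolute
    correlation with the protected attribute meets the (assumed) threshold."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, protected)
        if abs(r) >= threshold:
            flagged[name] = round(r, 2)
    return flagged


# Hypothetical data: protected attribute encoded as 1/0 for eight applicants.
protected = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "zip_area": [1, 1, 1, 0, 0, 0, 0, 0],          # tracks the protected attribute
    "years_experience": [3, 5, 2, 4, 3, 5, 2, 4],  # unrelated to it
}

flagged = flag_proxy_features(features, protected)
print(flagged)  # → {'zip_area': 0.77}
```

A flagged feature is a candidate for review rather than automatic removal: it may also carry legitimate predictive signal, and dropping it does not remove bias encoded in other features.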

4. Impact of Machine Learning Bias

Machine learning bias can have serious consequences. Here are a few key impacts:

- Discrimination: Biased algorithms can perpetuate discrimination in hiring, lending, and law enforcement. For instance, an AI system used for recruiting may favor male candidates over equally qualified female candidates, reinforcing gender inequality in the workplace.

- Loss of Trust: The discovery of biased outcomes can erode trust in technology. Users may conclude that AI systems are neither fair nor transparent and resist adopting new technologies.

- Legal Consequences: Companies that deploy biased algorithms may face legal repercussions. Discrimination lawsuits can arise if an AI system leads to unfair treatment of individuals based on race, gender, or other protected characteristics.

- Ineffective Solutions: Bias can make a system less effective. For example, a healthcare AI tool may provide inaccurate recommendations for certain demographic groups, undermining its usefulness for those patients.
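Discrimination in decisions such as hiring is often quantified by comparing selection rates across groups. In US employment practice, the "four-fifths rule" treats a group's selection rate below 80% of the highest group's rate as evidence of possible adverse impact. The sketch below computes that ratio for hypothetical screening decisions; the function names and data are illustrative and not taken from any particular library.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. 'advance to interview') per group."""
    totals, selected = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if decision else 0)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest one (1.0 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical screening outcomes: 6 of 10 men and 3 of 10 women advance.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["male"] * 10 + ["female"] * 10

ratio = disparate_impact_ratio(decisions, groups)
print(selection_rates(decisions, groups))  # → {'male': 0.6, 'female': 0.3}
print(ratio, "below 0.8" if ratio < 0.8 else "at or above 0.8")  # → 0.5 below 0.8
```

A ratio below 0.8 is a signal to investigate, not a legal conclusion, and equal selection rates are only one of several possible fairness criteria.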

5. Mitigating Machine Learning Bias

Addressing machine learning bias requires proactive measures. Here are some strategies to mitigate bias:

- Diverse Training Data: Ensure that the training dataset adequately represents every group the model will serve. This helps produce models that perform well across groups. Data scientists should prioritize inclusivity when selecting data sources.

- Regular Audits: Audit machine learning models regularly, for example by comparing error rates across demographic groups, to identify biases and areas for improvement. By continuously monitoring performance, organizations can catch and address bias early.

- Bias Detection Tools: Use tools designed to detect and analyze bias in machine learning models, such as the open-source Fairlearn and AI Fairness 360 toolkits. These tools can reveal potential biases and help developers make the necessary adjustments.

- Transparency: Promote transparency in AI systems. Organizations should clearly communicate how algorithms work and what data they use. This transparency builds trust and allows for public scrutiny.

- Inclusive Design: Involve diverse teams in the development process. By incorporating different perspectives, organizations can create more equitable AI systems. Diverse teams are more likely to identify potential biases and address them proactively.
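A regular audit can start by breaking standard metrics down by group instead of reporting a single aggregate. The sketch below computes accuracy and true positive rate (TPR, the share of actual positives the model correctly identifies) for each group, then reports the largest TPR gap between groups, a quantity sometimes called the equal opportunity difference. The function names and data are hypothetical; dedicated bias detection tools provide more complete versions of these metrics.

```python
import math


def group_audit(y_true, y_pred, groups):
    """Per-group accuracy and true positive rate (TPR) for binary labels."""
    stats = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats.setdefault(group, {"n": 0, "correct": 0, "pos": 0, "tp": 0})
        s["n"] += 1
        s["correct"] += int(truth == pred)
        if truth == 1:
            s["pos"] += 1
            s["tp"] += int(pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            # TPR is undefined when a group has no actual positives.
            "tpr": s["tp"] / s["pos"] if s["pos"] else math.nan,
        }
        for group, s in stats.items()
    }


def tpr_gap(report):
    """Largest TPR difference between any two groups (undefined TPRs skipped)."""
    tprs = [r["tpr"] for r in report.values() if not math.isnan(r["tpr"])]
    return max(tprs) - min(tprs) if tprs else 0.0


# Hypothetical labels and predictions for two groups, A and B.
y_true = [1, 1, 1, 1, 0, 0] + [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 1, 0, 1]
groups = ["A"] * 6 + ["B"] * 6

report = group_audit(y_true, y_pred, groups)
print(report)
print(tpr_gap(report))  # → 0.5
```

In a recurring audit, a check like this would run on fresh data, with an alert when the gap exceeds a tolerance the organization chooses; that threshold is a policy decision, not something the code can settle.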

6. The Future of Machine Learning Bias

As machine learning continues to evolve, addressing bias will remain a priority. Researchers and practitioners are increasingly aware of the importance of fairness in AI. Emerging technologies, such as fairness-aware algorithms, aim to minimize bias in decision-making processes.
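One established example of a fairness-aware technique is reweighing, a preprocessing method described by Kamiran and Calders. It assigns each training example with group `g` and label `y` the weight `P(g) * P(y) / P(g, y)`, so that group membership and the label are statistically independent in the weighted data. The sketch below is a minimal illustration on hypothetical data; the helper names are assumptions, not a library API.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label), so that
    group and label are independent in the weighted training data."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    num = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    den = sum(w for w, g in zip(weights, groups) if g == group)
    return num / den


# Hypothetical historical data: group A has 3 of 4 positive labels, group B has 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for group in ("A", "B"):
    print(group, round(weighted_positive_rate(weights, groups, labels, group), 3))
# → A 0.5
# → B 0.5
```

The weights can then be passed to any learner that supports per-example weights; for instance, many scikit-learn estimators accept a `sample_weight` argument in `fit`. Because reweighing changes only how much each example counts, not the learning algorithm, it works with most existing models.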

Regulatory frameworks governing the use of AI are also emerging. Policymakers increasingly recognize the need for standards that ensure fairness and accountability in AI systems, and this shift will likely push organizations to adopt best practices for mitigating bias.

7. Conclusion

Understanding machine learning bias is crucial for developing fair and effective AI systems. Bias can arise from various sources, including data, algorithms, and human error. Its impacts can be detrimental, leading to discrimination, loss of trust, and legal consequences.

To mitigate bias, organizations must prioritize diverse training data, conduct regular audits, and promote transparency. By taking these proactive steps, we can work towards a future where AI systems are equitable and just.

The conversation around machine learning bias is therefore essential: as we continue to integrate AI into our lives, addressing bias will be vital for building a better, more inclusive future.